
Over the past few years, increasing attention has been placed on speech and voice-enabling technologies, whether for convenience, accessibility, or safety in hands-free driving and machine operation. As devices such as the Amazon Echo, which operate entirely through voice control, gain popularity, user behavior and expectations are shifting from web-based to voice-based user experiences in many everyday settings. Siri’s voice control is available throughout the Apple hardware ecosystem: on iPhone, iPad, Apple Watch, Apple TV, CarPlay, and now, with the advent of macOS Sierra, on laptops and desktops. “Google Now” has similarly expanded from Android phones and tablets to Chromebooks, Android Wear, Android TV, Android Auto, and the brand-new Google Home initiative. Microsoft builds text-to-speech and speech-to-text features into Surface tablets and PCs, brings voice features to the forefront with Cortana in Windows 10, and is developing a new connected car initiative with Windows Embedded Automotive 7.

Seeing a pattern yet? It seems as though anywhere a device may operate, voice control, dictation and text-to-speech features are becoming an important, if not primary, means of user interaction. These features generally require always-on data connections, as the bulk of non-specialized voice recognition still takes place in cloud services. (Over time, more and more speech SDKs are allowing disconnected operation, if only on specialized data sets.) In this article, we’ll explore the major providers and SDKs out there and lay the groundwork for upcoming articles on Voice Commands, Speech Recognition and Text-to-Speech.

It might be worth taking a moment to explain what we mean by Voice Control, Dictation, and Text-To-Speech. Voice Control refers to the ability of a device to respond to spoken commands, typically using a wake phrase and a specialized, restricted language for the commands, such as “OK Google, directions from Boston to New York” on an Android device using Google Now. Dictation refers to the ability to enter free text using the spoken word alone. This is more challenging because it typically requires language selection (English versus Spanish, Japanese, etc.), is more complex without a restricted vocabulary, requires knowing the key words and phrases for entering punctuation and formatting instructions, and is usually impractical to edit by voice after the fact. Lastly, Text-To-Speech refers to the ability of a device to turn written text into audio of the spoken words. Text-To-Speech typically faces challenges with pronunciation errors in the speech engine, language detection/selection, placement of emphasis, detection of inline entities (such as addresses, abbreviations, acronyms or code snippets), and the monotony of robotic voices.
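To make the point about spoken punctuation concrete, here is a minimal sketch of a dictation post-processing step that turns spoken keywords into symbols. The keyword table `SPOKEN_PUNCTUATION` and the function `applySpokenPunctuation` are hypothetical illustrations, not part of any particular SDK; real dictation engines define their own, usually language-specific, vocabularies.

```javascript
// Hypothetical keyword table: spoken phrase -> symbol to insert.
// Real dictation engines define their own (language-specific) vocabularies.
const SPOKEN_PUNCTUATION = {
  "comma": ",",
  "period": ".",
  "full stop": ".",
  "question mark": "?",
  "exclamation point": "!"
};

// Replace spoken punctuation keywords in a raw transcript with symbols.
function applySpokenPunctuation(transcript) {
  let text = transcript;
  // Try longer phrases first so multi-word keywords are handled before shorter ones.
  const phrases = Object.keys(SPOKEN_PUNCTUATION).sort((a, b) => b.length - a.length);
  for (const phrase of phrases) {
    // \s* swallows the space spoken before the keyword;
    // \b keeps "period" from matching inside words such as "periodic".
    const pattern = new RegExp("\\s*\\b" + phrase + "\\b", "gi");
    text = text.replace(pattern, SPOKEN_PUNCTUATION[phrase]);
  }
  return text;
}
```

For example, `applySpokenPunctuation("hello comma world period")` yields `"hello, world."`. The same rule would also turn “a period of time” into “a. of time”, which illustrates why dictation without a restricted vocabulary is harder than it looks.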

As voice-controlled and speech-enabled features gain popularity, three primary infrastructure requirements come to mind. The first is High Availability. For systems that provide critical services to connected vehicles and homes, there can be no downtime. When all HVAC, doors, lights, security systems and windows are controlled by Amazon Echo’s “Alexa,” Alexa must always have a data connection! Second, High Performance is a must. Recording and encoding/decoding speech are latency-sensitive operations (especially on slower, bandwidth-constrained mobile data networks), so fast response times are essential for user experience and for building trust. Lastly, and perhaps most importantly, High Security is essential. These services carry private user speech commands and data that control cars and homes, so there must be clear capabilities for locking down and controlling access to authorized users and applications.

These three requirements, Availability, Performance, and Security, are exactly where the PubNub Data Stream Network comes into the picture. PubNub is a global data stream network that provides “always-on” connectivity to just about any device with an internet connection (there are now over 70 SDKs for a huge range of programming languages and platforms). PubNub’s Publish-Subscribe messaging provides the mechanism for secure channels in your application, where servers and devices can publish and subscribe to structured data message streams in real time: messages propagate worldwide in under a quarter of a second (250ms for the performance enthusiasts out there).

Now that we have an idea of the overall landscape of Voice Activation, let’s take a look at some of the most noteworthy offerings from 4 top voice-enabled technology stacks.

Amazon Echo

The Amazon Echo and Echo Dot are internet-connected home- and office-based devices that contain a specialized microphone array (for optimized beam-forming voice isolation) and a speaker. By speaking a phrase starting with the wake word (usually “Alexa”), users can perform web searches, ask for facts, play music and listen to audiobooks (of course), reorder items on Amazon (ditto), and perform an ever-expanding list of tasks called skills in the Echo ecosystem (like ordering a pizza, checking a bank balance or retrieving stock quotes). This summer, one article estimated the Echo installed base at around 4 million units.

One of the most interesting aspects of the Amazon Echo ecosystem is the ability for developers to create new skills using JavaScript and JSON definitions on the AWS Lambda platform. This “serverless” model favors small, self-contained functions so that stateless compute resources can be scaled entirely on demand. There is also a skills certification process (similar to Apple App Store review) so that skills using advanced capabilities (such as purchasing items with real funds, or unlocking/disarming a device) are deployed in a safe and trustworthy way.
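To illustrate the model, here is a minimal sketch of a skill handler written as a Node.js Lambda function. It reads the Alexa request envelope (`event.request.type`, `event.request.intent.name` and slot values) and returns the JSON response envelope with `outputSpeech`. The intent name `GetStockQuoteIntent`, its `Symbol` slot and the canned reply are hypothetical; a real skill would declare them in its JSON interaction model and fetch live data.

```javascript
// Build the JSON response envelope that Alexa expects back from a skill.
function buildSpeechResponse(text, endSession) {
  return {
    version: "1.0",
    response: {
      outputSpeech: { type: "PlainText", text: text },
      shouldEndSession: endSession
    }
  };
}

// Route an incoming Alexa request to a reply.
// "GetStockQuoteIntent" and its "Symbol" slot are hypothetical examples.
function handleAlexaRequest(event) {
  const request = event.request;
  if (request.type === "LaunchRequest") {
    // Keep the session open so the user can answer the question.
    return buildSpeechResponse("Which stock would you like a quote for?", false);
  }
  if (request.type === "IntentRequest" && request.intent.name === "GetStockQuoteIntent") {
    const slot = request.intent.slots && request.intent.slots.Symbol;
    const symbol = slot && slot.value ? slot.value : "that stock";
    // A real skill would look up a live quote here; this reply is a placeholder.
    return buildSpeechResponse("Looking up a quote for " + symbol + ".", true);
  }
  // Other request types (e.g. SessionEndedRequest) need their own handling in a real skill.
  return buildSpeechResponse("Sorry, I didn't understand that.", true);
}

// AWS Lambda entry point (Node.js async handler), guarded so the file
// also loads outside a CommonJS module.
if (typeof exports !== "undefined") {
  exports.handler = async (event) => handleAlexaRequest(event);
}
```

Keeping the routing logic in a plain function (`handleAlexaRequest`) separate from the Lambda entry point makes it easy to test with sample request JSON before deploying.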

For folks out there who would like to go beyond Voice Commands on Echo, there are some reports that Echo will soon have Voice Notifications using text-to-speech on the device.

That said, the Amazon Echo platform still has key limitations. There is no screen on the device (just a blue activation light ring), so any complicated setup must take place by alternative means (such as the Alexa mobile app).

Apple Siri

Since Siri debuted on iPhone 4S in 2011, it has spread throughout the Apple hardware ecosystem to iPad, Apple Watch, Apple TV, CarPlay and macOS Sierra. It provides voice activation of a large and growing number of services on the iOS/macOS platform. Many users are already familiar with using Siri for web searches, navigation, setting alarms/timers/reminders and so on (the full list is pretty extensive). The Apple SDK for Siri extensions is aptly named SiriKit, and allows tie-ins to Ride Hailing, Messaging, Photo Search, Payments, VoIP Calling and Workouts. In recent iPhone models, Siri can detect the “Hey Siri” wake phrase and respond to commands even when the phone is locked.


For developers who wish to go beyond Siri, iOS and macOS also offer SDKs for Speech Recognition and Speech Synthesis (text-to-speech). These enable the creation of compelling messaging and social apps on the iOS and macOS platforms. Many of these platforms, such as watchOS and CarPlay, are still in their infancy, so it remains to be seen what the most compelling use cases will be for voice control, dictation and text-to-speech in these ubiquitous computing environments. Another key limitation for developers is that although this technology stack offers powerful capabilities, it is restricted to the Apple ecosystem, so reaching large-scale audiences on Android requires substantial additional effort.

Microsoft Cortana

For completeness, it is worth noting that Microsoft Cortana is Microsoft’s personal assistant on Windows 10 phone, tablet and PC platforms.


It allows users to navigate, make calls, play songs, set reminders, and more (the full list of commands is as extensive as those for the Apple and Google technologies). It offers an API that lets developers create Cortana Actions for custom commands, as well as structured data markup similar to Google Now cards.

For developers who wish to go beyond Cortana, Microsoft offers several Cognitive Services for Speech Recognition and Speech Synthesis (as well as other use cases such as knowledge search and image search). Altogether, these provide a useful set of tools for developers who wish to target the Microsoft technology stack. As with the other platforms listed above, however, maintaining several platform-specific native applications requires substantial effort.

Google Now and Voice Actions

Google Now is Android’s answer to Apple’s Siri technology: it provides voice search and commands, as well as “Now cards” that present information in a context-specific format to simplify the user experience. Google has recently announced that it is being extended into a full-fledged platform called Google Assistant. Google Now has a similar set of voice commands to Siri, including web searches, navigation, and setting timers/alarms/reminders (the full list of Google Now commands is also pretty extensive).

If Google Now commands are not enough, Android offers the SpeechRecognizer API and Text-To-Speech services. These enable application developers to integrate dictation and reading services into applications. In our testing, we found Google Now to be more “hesitant” and slightly less convenient than Siri in processing voice commands. For example, the “navigate from Boston to New York” command brought up a Google Now card that required additional taps, whereas Siri opened Maps and began navigation immediately. However, this could be seen either way, since the definition of “correct” behavior is so context-dependent. Similar to the Siri technology stack, the Android technologies do not address the Apple hardware ecosystem, which leaves developers with substantial effort to support both platforms for large-scale audiences.

Emerging Web Speech APIs

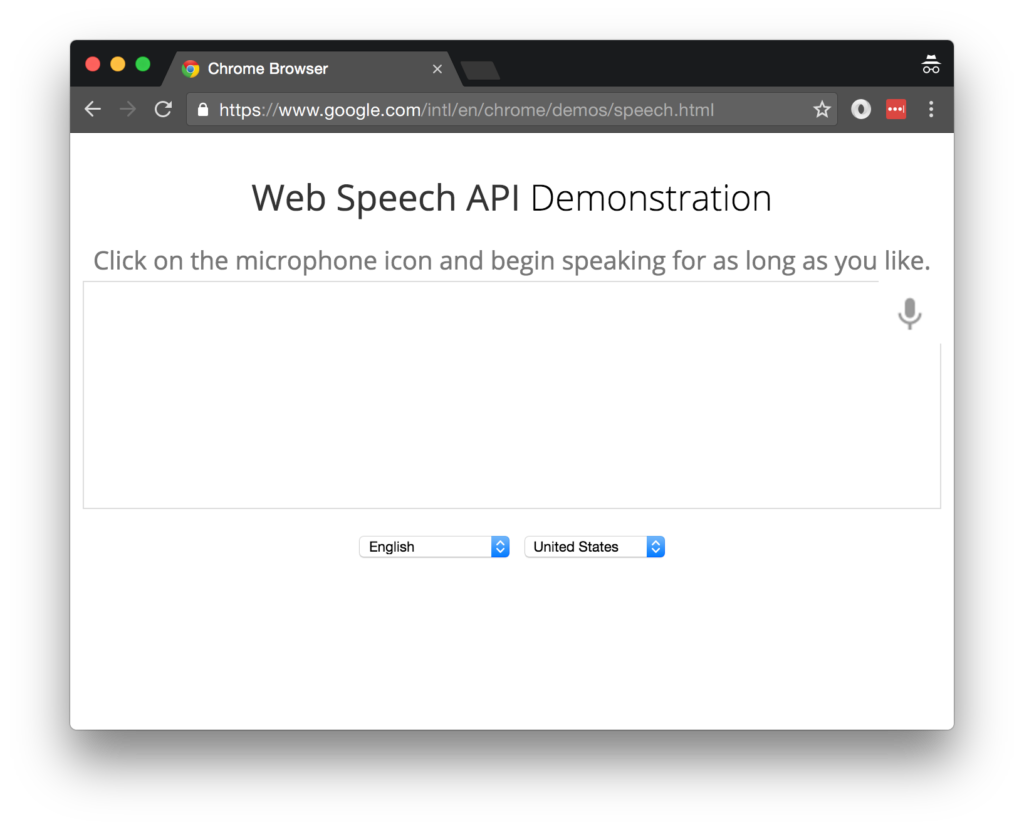

The above solutions work well within a single technology stack, but they require substantial effort and engineering resources to maintain across a diverse array of target platforms. In the meantime, one promising set of technologies is worth highlighting for rapid prototyping across desktop and Android environments: the W3C’s emerging Web Speech API specification, which covers both Speech Synthesis and Speech Recognition.

The Chrome desktop and Android browsers (hopefully iOS mobile soon!) include support for these speech APIs. Using them, it’s possible to create applications with voice control, dictation and text-to-speech. Although they don’t allow the same level of user interface polish, integration and control as native APIs, we really enjoy using them where rapid development is necessary and web applications suffice.

Since you’re reading this at PubNub, we’ll presume you have a realtime application use case in mind, such as ride-hailing, messaging, IoT or other. In the sections below, we give examples of text-to-speech and dictation, and take a first look at voice control.

Getting Started with PubNub

The first things you’ll need before you can create a realtime application with PubNub are publish and subscribe keys from PubNub (you probably took care of this already if you followed the steps in a previous article). If you haven’t already, you can create an account, get your keys and be ready to use the PubNub network in less than 60 seconds.

PubNub plays together really well with JavaScript because the PubNub JavaScript SDK is extremely robust and has been battle-tested over the years across a huge number of mobile and backend installations. The SDK is currently on its 4th major release, which features a number of improvements such as isomorphic JavaScript, new network components, unified message/presence/status notifiers, and much more. NOTE: for compatibility with the PubNub AngularJS SDK, our UI code will use the PubNub JavaScript v3 API syntax.

The PubNub JavaScript SDK is distributed via Bower or the PubNub CDN (for Web) and NPM (for Node), so it’s easy to integrate with your application using the native mechanism for your platform. In our case, it’s as easy as including the CDN link from a script tag.

That note about API versions bears repeating: the user interfaces in upcoming articles use the v3 API (since they need the AngularJS API, which still runs on v3). We expect the AngularJS API to be v4-compatible soon. In the meantime, please stay alert when jumping between different versions of JS code!
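With that caveat in mind, here is a minimal sketch, in v3 syntax, of how speech transcripts might be shared over a PubNub channel. It wraps a client created with `PUBNUB.init({ publish_key: ..., subscribe_key: ... })` and uses the v3-style `publish({ channel, message })` and `subscribe({ channel, message })` calls. The helper name `createTranscriptChannel` and the `{ text, sentAt }` message shape are hypothetical choices for this example, and the client is passed in so the helper stays independent of how the SDK was loaded.

```javascript
// Share speech transcripts over a PubNub channel using the v3 JavaScript API.
// `pubnub` is assumed to be a v3 client from PUBNUB.init({ publish_key, subscribe_key }).
// The { text, sentAt } message shape is a hypothetical choice for this sketch.
function createTranscriptChannel(pubnub, channel) {
  return {
    // Publish one piece of recognized (or to-be-spoken) text.
    send: function (text) {
      pubnub.publish({
        channel: channel,
        message: { text: text, sentAt: Date.now() }
      });
    },
    // Call onText with the text of every message that arrives on the channel.
    listen: function (onText) {
      pubnub.subscribe({
        channel: channel,
        message: function (message) {
          onText(message.text);
        }
      });
    }
  };
}
```

In a page that loads the v3 SDK, usage might look like `var transcripts = createTranscriptChannel(PUBNUB.init({ publish_key: "YOUR_PUB_KEY", subscribe_key: "YOUR_SUB_KEY" }), "voice-demo");` followed by `transcripts.listen(...)` and `transcripts.send(...)`. The key placeholders and the channel name `voice-demo` are arbitrary.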

Text-to-Speech

With the new Speech Synthesis API, speaking text is quite easy:

// NOTE: assume this is Chrome desktop/Android specific code!
var theText = "this is the text you wish to speak";
window.speechSynthesis.speak(new SpeechSynthesisUtterance(theText));

(Full working code is available as a CodePen and Gist.)

We simply create a new instance of a SpeechSynthesisUtterance with the given text, and send it off to the window.speechSynthesis.speak function for translation to audio. Note that in a production application, you’ll want to code more defensively and degrade gracefully on browsers that don’t support speech features.
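A minimal sketch of that more defensive style might feature-detect `speechSynthesis` and `SpeechSynthesisUtterance` before speaking and tell the caller whether speech was attempted. The function name `speakText` and its options object are hypothetical; `lang`, `rate`, `onend` and `onerror` are standard `SpeechSynthesisUtterance` properties. The optional `env` parameter defaults to `window` and exists only so the check can be exercised outside a browser.

```javascript
// Speak `text` if the browser supports the Web Speech synthesis API.
// Returns true if an utterance was queued, false if speech is unavailable.
// `options` ({ lang, rate, onEnd, onError }) and `env` are hypothetical parameters.
function speakText(text, options, env) {
  const opts = options || {};
  const scope = env || (typeof window !== "undefined" ? window : {});
  if (!scope.speechSynthesis || typeof scope.SpeechSynthesisUtterance !== "function") {
    return false; // Degrade gracefully: the caller can display the text instead.
  }
  const utterance = new scope.SpeechSynthesisUtterance(text);
  if (opts.lang) utterance.lang = opts.lang; // e.g. "en-US"
  if (opts.rate) utterance.rate = opts.rate; // 1 is the default speaking rate
  utterance.onend = opts.onEnd || null;
  utterance.onerror = opts.onError || null;
  scope.speechSynthesis.speak(utterance);
  return true;
}
```

Returning a boolean lets the caller fall back, for example by simply displaying the text, when speech is unavailable.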

Speech Recognition

Speech Recognition is a bit more complicated, but we can easily put together a simple demo like this:

// NOTE: assume this is Chrome desktop/Android specific code!
var theText = "";
var recognition = new webkitSpeechRecognition();

recognition.onresult = function (event) {
  for (var i = event.resultIndex; i < event.results.length; i++) {
    if (event.results[i].isFinal) {
      theText += event.results[i][0].transcript;
    }
  }
};

recognition.start();

(Full working code is available as a CodePen and Gist.)

We simply create a new instance of the webkitSpeechRecognition class, install an onresult() handler function that appends each final fragment of detected text to a string, and call recognition.start(). Note that in a production application, you’ll want to code more defensively, potentially add onstart and onend handlers, and degrade gracefully on browsers that don’t support speech features.
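As one example of that more defensive style, here is a minimal sketch that prefers the unprefixed `SpeechRecognition` constructor, falls back to `webkitSpeechRecognition`, enables `continuous` and `interimResults`, and wires up `onerror` and `onend`. The function name `startDictation` and its callback names (`onText`, `onError`, `onEnd`) are hypothetical. The optional `env` parameter defaults to `window` and exists only so the logic can be exercised outside a browser.

```javascript
// Start dictation if the browser supports speech recognition.
// Returns the recognition object, or null when recognition is unavailable.
// `callbacks` ({ lang, onText, onError, onEnd }) and `env` are hypothetical parameters.
function startDictation(callbacks, env) {
  const cb = callbacks || {};
  const scope = env || (typeof window !== "undefined" ? window : {});
  const Recognition = scope.SpeechRecognition || scope.webkitSpeechRecognition;
  if (typeof Recognition !== "function") {
    return null; // Degrade gracefully, e.g. keep a normal text input.
  }
  const recognition = new Recognition();
  recognition.lang = cb.lang || "en-US";
  recognition.continuous = true;     // keep listening across pauses
  recognition.interimResults = true; // report partial guesses while the user speaks

  let finalText = "";
  recognition.onresult = function (event) {
    let interimText = "";
    // Results before resultIndex are already final and were handled earlier.
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const transcript = event.results[i][0].transcript;
      if (event.results[i].isFinal) {
        finalText += transcript;
      } else {
        interimText += transcript;
      }
    }
    if (cb.onText) cb.onText(finalText, interimText);
  };
  recognition.onerror = function (event) {
    if (cb.onError) cb.onError(event.error); // e.g. "not-allowed", "no-speech"
  };
  recognition.onend = function () {
    if (cb.onEnd) cb.onEnd(finalText);
  };
  recognition.start();
  return recognition;
}
```

Reporting interim text separately lets a UI show a live, provisional guess while keeping only finalized text in the saved transcript.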

Voice Commands

Voice commands are similar to Speech Recognition, except that we take the recognized speech input, tokenize (parse) it, and compare it to a list of commands that the application supports. Rather than go into all the details of phrase parsing here, we’ll provide a representative example of voice command handling in an upcoming article.
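Until then, here is a minimal sketch of the matching step described above, assuming a hypothetical wake phrase (`"ok app"`) and a hypothetical command table of regular expressions. It normalizes the transcript, checks for the wake phrase, and returns the first command whose pattern matches, along with its captured arguments. Production assistants use far more robust grammars or language-understanding services; this only shows the shape of the problem.

```javascript
// Hypothetical wake phrase and command table, for illustration only.
const WAKE_PHRASE = "ok app";
const COMMANDS = [
  { name: "directions", pattern: /^directions from (.+) to (.+)$/ },
  { name: "setTimer", pattern: /^set a timer for (\d+) minutes?$/ },
  { name: "lightsOn", pattern: /^turn on the (\w+) lights?$/ }
];

// Lowercase, strip punctuation, and collapse whitespace in a transcript.
function normalize(transcript) {
  return transcript.toLowerCase().replace(/[^a-z0-9\s]/g, "").replace(/\s+/g, " ").trim();
}

// Return { name, args } for the first matching command, or null.
function matchCommand(transcript) {
  let text = normalize(transcript);
  if (!text.startsWith(WAKE_PHRASE + " ")) {
    return null; // Ignore speech that is not addressed to the app.
  }
  text = text.slice(WAKE_PHRASE.length + 1);
  for (const command of COMMANDS) {
    const match = text.match(command.pattern);
    if (match) {
      return { name: command.name, args: match.slice(1) };
    }
  }
  return null;
}
```

For example, `matchCommand("OK app, directions from Boston to New York")` returns `{ name: "directions", args: ["boston", "new york"] }`, while the same phrase without the wake phrase returns `null`.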

Conclusion

Thank you so much for joining us in this first article of the Voice Activation series! Hopefully it’s been a useful experience learning about voice-enabled technologies. In future articles, we’ll dive deeper into the Speech APIs and use cases for realtime web applications.

Stay tuned, and please reach out anytime if you feel especially inspired or need any help!