Business intelligence has evolved dramatically over time. That evolution, combined with the rapid development of real-time technology, has made real-time business intelligence one of the most innovative and effective business intelligence approaches available today.

Real-time business intelligence tools process, analyze, and deliver business information as it happens. Early business intelligence involved manually analyzing information or pulling data and reports from historical OLAP clusters. Today, with real-time business intelligence tools, users have immediate access to data and can act on business events as they occur. With real-time information, decisions can be made as events unfold, and decision makers can be proactive rather than reactive. From financial decisions to inventory management to sales reports, real-time business intelligence has taken business decision making to the next level.

But behind every great real-time business intelligence tool is a data stream network, which not only powers the real-time functionality in the tool but also ensures reliability, low latency, and scalability. That data stream network puts both the “real-time” and the “intelligence” in a business intelligence tool.

What kind of issues will a developer face when building a real-time business intelligence tool?

When dealing with big data, you’ll typically have a lot of machines operating simultaneously. That makes it a distributed tracking and logging challenge, or at least a “what’s happening” challenge. These machines are very expensive, and if you’re a big company with a lot of them, coordinating them all in real-time can be problematic.

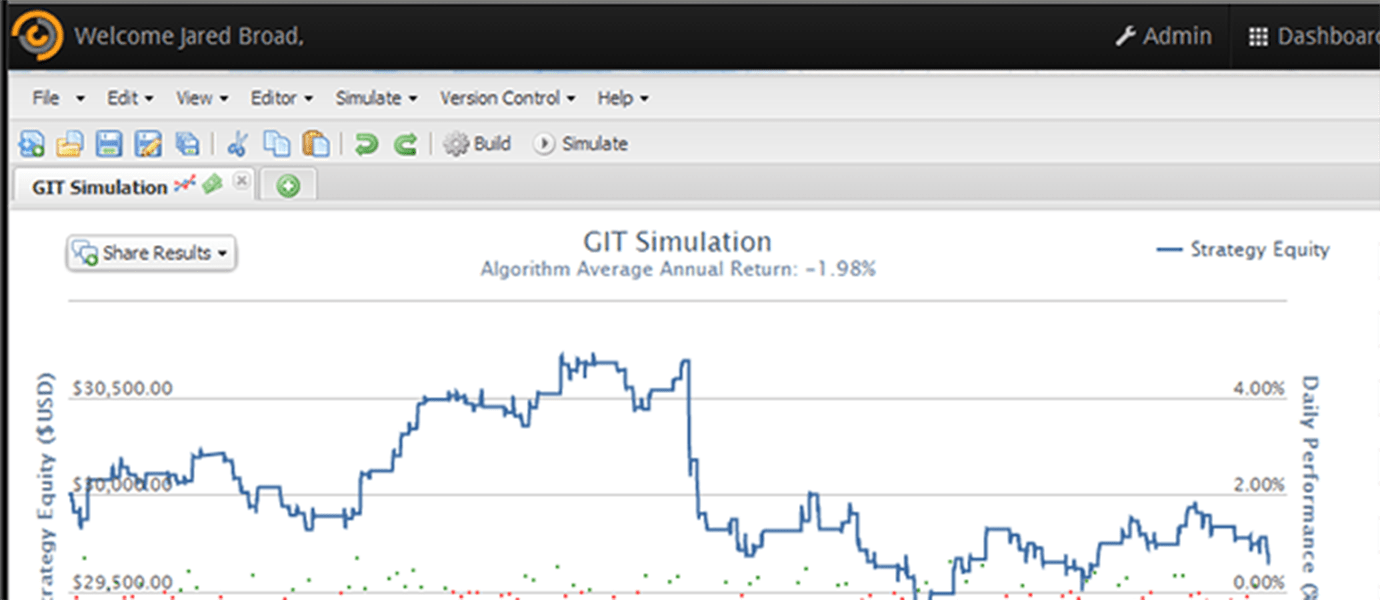

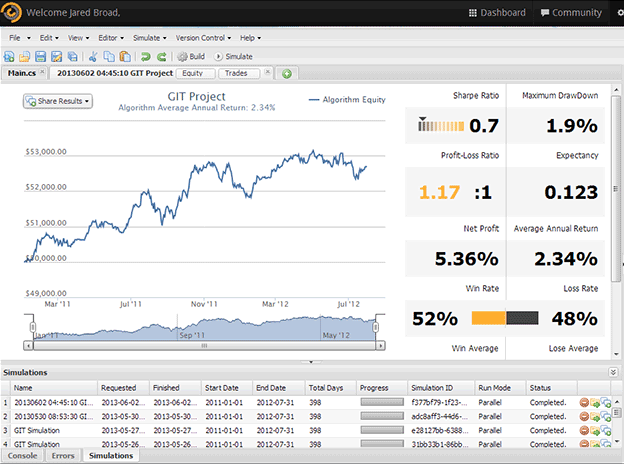

QuantConnect uses real-time to provide algorithm backtesting for investments. Users can watch the graph update in real-time.

You need a mechanism (a globally distributed data stream network) that lets every machine communicate over the same bus, so you can have a dashboard of real-time status updates from all machines in aggregate: what they are working on, whether there were any failures in processing, and whether any thresholds are being breached. These are things you can only get from a real-time system.
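To make the dashboard idea concrete, here is a minimal in-memory sketch in Python. It assumes each machine sends a small status record over the shared bus. The `StatusUpdate` fields, the `Dashboard` class, and the 0.9 CPU threshold are hypothetical names and values chosen for illustration; they are not part of any real SDK, and the network transport is left out entirely.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class StatusUpdate:
    machine_id: str   # hypothetical identifier for the reporting machine
    task: str         # what the machine is currently working on
    failed: bool      # whether its last processing step failed
    cpu_load: float   # fraction of CPU in use, 0.0 to 1.0


class Dashboard:
    """Keeps the latest update per machine and summarizes the whole fleet."""

    def __init__(self, cpu_threshold: float = 0.9):
        self.cpu_threshold = cpu_threshold
        self.latest = {}

    def on_message(self, update: StatusUpdate) -> None:
        # A newer update from the same machine replaces the older one.
        self.latest[update.machine_id] = update

    def summary(self) -> dict:
        updates = list(self.latest.values())
        return {
            "machines": len(updates),
            "tasks": dict(Counter(u.task for u in updates)),
            "failures": sorted(u.machine_id for u in updates if u.failed),
            "over_threshold": sorted(
                u.machine_id for u in updates
                if u.cpu_load > self.cpu_threshold
            ),
        }
```

In a real deployment, `on_message` would be called by whatever subscribe callback your data stream network provides, and `summary()` would feed the dashboard view.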

How would you architect something like this?

Say you have 700 systems with 40,000 or 50,000 CPUs running simultaneously. The problem becomes a coordination challenge between all these systems. Typically there are Online Analytical Processing (OLAP) systems with several SQL server clusters running and collecting aggregate sum results. What you don’t know is how long a specific batch is going to take or whether a specific run will be successful. To monitor the system and all the changes as they happen, a data stream network is required.

For example, if your business intelligence tool is tracking the electrical usage of a neighborhood, it will be able to tell you in real-time: “Status 70% complete and 1,000 customers did X, Y, and Z, meters report 400 watts of electricity consumed for neighborhood 72.”
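A status message like the one above could be assembled from a partially processed batch roughly as follows. This is only a sketch: the function name, the JSON field names, and the sample readings are assumptions made for illustration, not a format defined by any particular tool.

```python
import json


def build_status_message(neighborhood: int, readings_watts: list,
                         total_meters: int) -> str:
    """Summarize a partially processed batch of meter readings as JSON."""
    processed = len(readings_watts)
    # Guard against an empty batch so we never divide by zero.
    percent = round(100 * processed / total_meters) if total_meters else 0
    return json.dumps({
        "neighborhood": neighborhood,
        "percent_complete": percent,
        "meters_processed": processed,
        "watts_consumed": sum(readings_watts),
    })


# Hypothetical readings: 3 of 4 meters processed for neighborhood 72.
print(build_status_message(72, [100, 150, 150], 4))
```

Each worker would send a message like this as its batch progresses, which is what lets a dashboard show how far along a run is instead of waiting for it to finish.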

The need for a data stream network

Developing a business intelligence tool is only 25% of the pain. A custom solution (built on WebSockets or Socket.io, for example) can deliver real-time information. However, it’s in the other 75%, deployment and scaling, that these custom solutions fall short. This is where using a real-time service provider beats building a custom solution in-house.

Think about it in terms of the hardware that’s available, the amount of effort, and the engineering time required to keep a system like this up and running and coordinated with all the other systems. It becomes a major operational challenge.

When your business intelligence tool is business critical to your customers, any downtime could be disastrous for them. The real-time functionality needs to always be there and ready to go, without anyone having to worry about ongoing maintenance. It’s like a phone connection that must be always on, and it comes down to the reliability of a data stream network vs. a custom-built solution.

Business intelligence isn’t very intelligent if it’s not working.

The following are a couple of examples of verticals that a business intelligence solution can be built around. They fall under the general horizontal of big data: you have tons of data and several machines coordinating with each other, and you need a service that can give you real-time updates about what is happening.

Electric companies: Electric companies have hundreds of thousands of meters that are constantly tabulating data. As the sampling frequency for each neighborhood increases, we’re talking a boatload of data. Being able to see all that data in real-time becomes much easier when you have a data stream network that you can rely on. Additionally, when you’re processing the metering at the end of the month, aggregating it and being able to see it in real-time is key.

Social data: When hundreds of millions of users are logging on to, say, Facebook at the same time, processing that much data is expensive and hard. Decisions are made as data is presented, so waiting hours, days, or weeks for user data isn’t fast enough in the social media world.

How to use PubNub to power a Business Intelligence Tool

PubNub has OLAP hooks and SQL triggers that you can add for certain scenarios to send a summary message across the pipe, so you can receive it in dashboards and graphs. PubNub also has SDKs, from Python to PHP to Java, that you can call when certain thresholds are reached, or even from Hadoop if you’re doing big data processing with Pig.
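The threshold idea can be sketched in Python as follows. To keep the example self-contained, it does not call the PubNub SDK directly: `publish` is a stand-in callable that you would wire to your SDK's publish call, and `ThresholdTrigger`, the channel name, and the message fields are hypothetical names used for illustration.

```python
from typing import Callable


class ThresholdTrigger:
    """Calls `publish` once each time a metric crosses above a threshold."""

    def __init__(self, channel: str, threshold: float,
                 publish: Callable[[str, dict], None]):
        self.channel = channel
        self.threshold = threshold
        self.publish = publish   # stand-in for a real SDK publish call
        self.breached = False

    def observe(self, metric: str, value: float) -> None:
        above = value > self.threshold
        if above and not self.breached:
            # Publish only on the transition, not on every sample.
            self.publish(self.channel, {
                "metric": metric,
                "value": value,
                "threshold": self.threshold,
            })
        self.breached = above


# Usage with a list standing in for the network.
sent = []
trigger = ThresholdTrigger("bi-alerts", 100.0,
                           lambda channel, message: sent.append((channel, message)))
for watts in [50, 120, 130, 80, 101]:
    trigger.observe("watts", watts)
```

Publishing only when the value first crosses the threshold keeps the dashboard from being flooded with a message for every sample taken while the metric stays high.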

Below are a couple of code snippets to help you get started. For more details, we have a more in-depth StackOverflow answer with additional code samples.

Stream Real-time Updates from OLAP MySQL Triggers via Stored Procedures

MySQL Trigger Code