
We’re witnessing a meteoric rise in natural language processing as the mainstream embraces the technology in tandem with the widespread adoption of artificial intelligence. From the Google Speech API to Siri, Alexa, and IBM Watson, we’re growing increasingly reliant on NLP without even realizing it. Playing music, scheduling meetings, even controlling devices: the use cases are nearly endless.

In this tutorial, we dive into a simple example of how to convert text-to-speech in a realtime AngularJS web application with our IBM Watson Text-to-Speech BLOCK and 80 lines of HTML and JavaScript.

What Is Text-to-Speech, and Why Use It?

Text-to-Speech refers to the ability of a device to turn written text into audio of the spoken words. Text-to-Speech services can be useful in a variety of situations, such as accessibility for users with different abilities, providing audio instead of visual output to avoid distracted driving, and other cases where a screen may not be present. Many customer service applications also use text-to-speech as part of inbound and outbound phone call processing. As these techniques gain popularity, user behavior and expectations are shifting from web-based to voice-based user experiences in many everyday settings.

Text-to-Speech typically has challenges due to pronunciation errors in the speech engine, language detection/selection, placement of emphasis, detection of inline entities (such as addresses, abbreviations, acronyms or code snippets), and monotony of robotic voices.

As these cognitive services gain traction in real-world situations, there are three primary infrastructure requirements that come to mind.

- High Availability. For services that provide critical functions to connected vehicles and homes, there can be no downtime. When all HVAC, doors, lights, security systems and windows are controlled by devices, there must always be a data connection!

- High Performance is a must. Since recording and encoding/decoding speech are latency-critical operations (especially on slower, bandwidth-constrained mobile data networks), response time is critical for user experience and building trust.

- High Security is essential. These services carry private user communications and data that controls cars and homes, so there must be clear capabilities for locking down and controlling access to authorized users and applications.

These three requirements (Availability, Performance, and Security) are exactly where the PubNub Data Stream Network comes into the picture. PubNub is a global data stream network that provides “always-on” connectivity to just about any device with an internet connection (there are now over 70 SDKs for a huge range of programming languages and platforms). PubNub’s Publish-Subscribe messaging provides the mechanism for secure channels in your application, where servers and devices can publish and subscribe to structured data message streams in realtime: messages propagate worldwide in under a quarter of a second (250ms for the performance enthusiasts out there).

Text-to-Speech and PubNub BLOCKS

In addition to those three strengths, we take advantage of BLOCKS, an awesome new PubNub feature that allows us to decorate messages with supplementary data. In this article, we create a PubNub BLOCK of JavaScript that runs entirely in the network and adds text-to-speech URIs into the messages so that the web client UI code can stay simple and just use an audio tag for playback. With PubNub BLOCKS, it’s very easy to integrate third-party applications into your data stream processing. Here is a complete catalog of pre-made BLOCKS.

As we prepare to explore our sample AngularJS web application with text-to-speech features, let’s check out the underlying IBM Watson Text-to-Speech API.

IBM Watson Text-to-Speech API

Native solutions work well in a single technology stack such as iOS or Android, but they require substantial effort and engineering resources to maintain across a diverse array of targeted platforms. By contrast, the IBM Watson Text-to-Speech API makes it easy to voice-enable your applications with straightforward WAV stream playback (or a variety of other formats).

Modern HTML5 browsers including Chrome, Firefox and Safari have support for the Web Media APIs. Using these APIs, it’s possible to create applications with audio and/or video playback. In our case, we build a request URL for the IBM Watson Text-to-Speech API that streams back WAV audio, and pass that URL to an audio tag for playback.

Since you’re reading this at PubNub, we’ll presume you have a realtime application use case in mind, such as ride-hailing, messaging, IoT or other. In the sections below, we’ll dive into the text-to-speech use case, saving the other cognitive service use cases for the future.

Getting Started with PubNub

The first things you’ll need before you can create a realtime application with PubNub are publish and subscribe keys from PubNub (you probably took care of this if you followed the steps in a previous article). If you haven’t already, you can create an account, get your keys and be ready to use the PubNub network in less than 60 seconds.

- Step 1: go to the signup form.

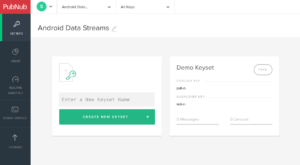

- Step 2: create a new application, including publish and subscribe keys.

The publish and subscribe keys look like UUIDs and start with “pub-c-” and “sub-c-” prefixes respectively. Keep these handy – you’ll need to plug them in when initializing the PubNub object in your JavaScript application.

- Step 3 (optional): make sure the application corresponding to your publish and subscribe key has the Presence add-on enabled if you’d like to use Presence features for tracking device connection state and custom attributes.

That’s it, nicely done!

About the PubNub JavaScript SDK

PubNub plays together really well with JavaScript because the PubNub JavaScript SDK is extremely robust and has been battle-tested over the years across a huge number of mobile and backend installations. The SDK is currently on its 4th major release, which features a number of improvements such as isomorphic JavaScript, new network components, unified message/presence/status notifiers, and much more. NOTE: for compatibility with the PubNub AngularJS V4 SDK, our UI code uses the PubNub JavaScript v4 API syntax.

The PubNub JavaScript SDK is distributed via Bower or the PubNub CDN (for Web) and NPM (for Node), so it’s easy to integrate with your application using the native mechanism for your platform. In our case, it’s as easy as including the CDN link from a script tag.

That note about API versions bears repeating: the user interfaces in this series of articles use the v4 API (since they need the AngularJS API, which now runs on v4). Be aware of the transition from V3 to V4 as you consider different code samples, and please stay alert when jumping between different versions of JS code!

Getting Started with IBM Watson on BlueMix

The next thing you’ll need to get started with cognitive services is a BlueMix account to take advantage of the Watson APIs.

- Step 1: go to the BlueMix signup form.

- Step 2: go to the IBM Watson Text-to-Speech API page and add it to your service dashboard.

- Step 3: go to the “service credentials” pane of the service instance you just created and make note of the username and password.

All in all, not too shabby!

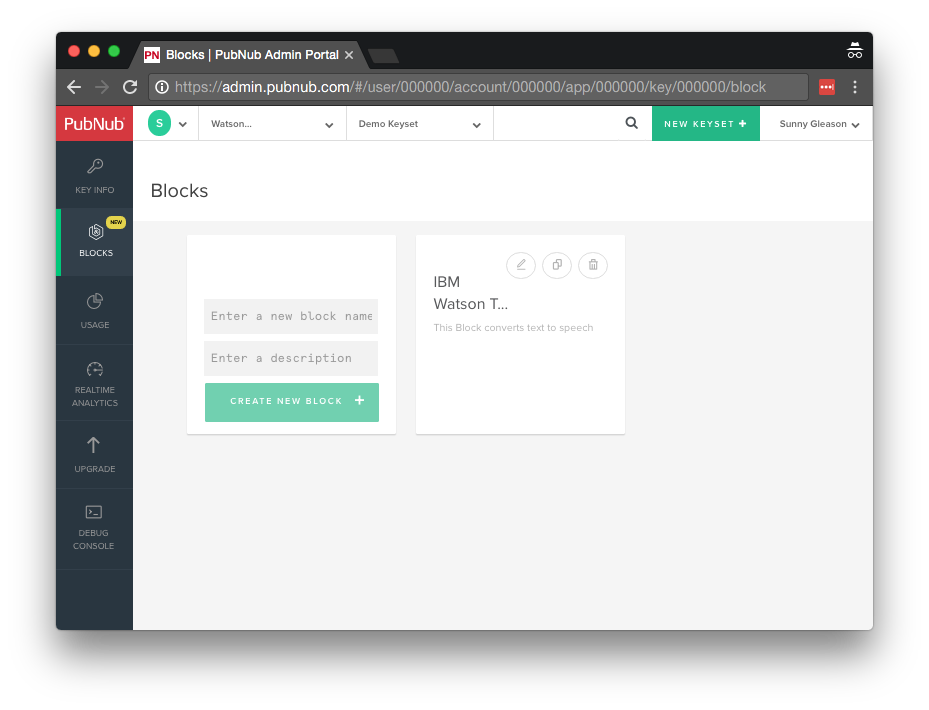

Setting Up the BLOCK

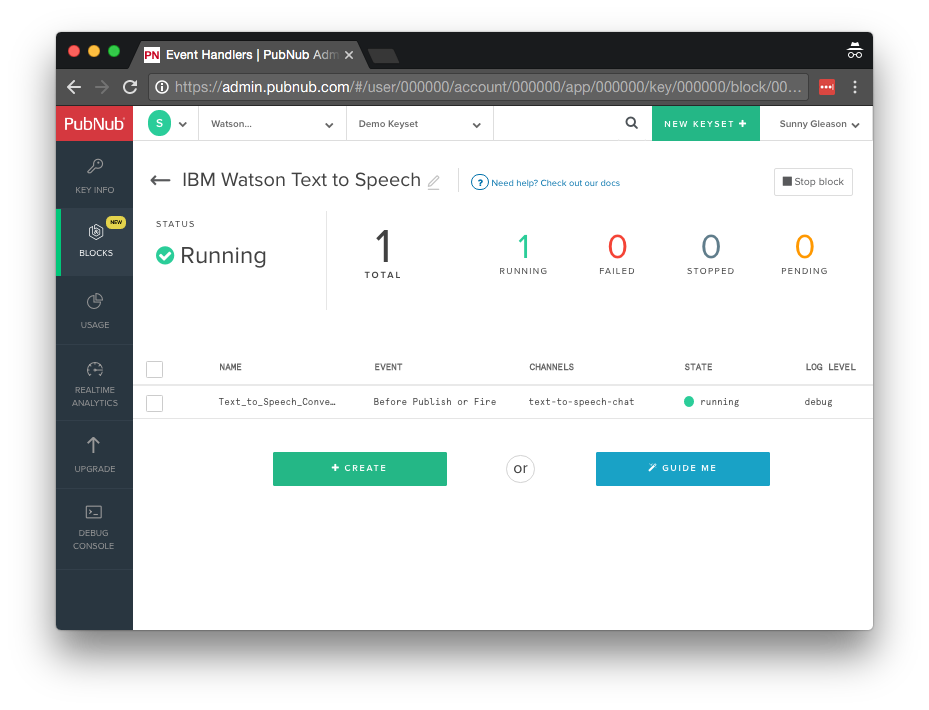

With PubNub BLOCKS, it’s really easy to create code to run in the network. Here’s how to make it happen:

- Step 1: go to the application instance on the PubNub admin dashboard.

- Step 2: create a new BLOCK.

- Step 3: paste in the BLOCK code from the next section and update the credentials with the Watson credentials from the previous steps above.

- Step 4: Start the BLOCK, and test it using the “publish message” button and payload on the left-hand side of the screen.

That’s all it takes to create your serverless code running in the cloud!
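For the test publish in Step 4, a payload shaped like the one below should do the trick. This is a minimal sketch, assuming the BLOCK code from the next section, which reads the `text` attribute of each incoming message. The variable names and the sample sentence are just placeholders.

```javascript
// Hypothetical test message for the "publish message" panel.
// The BLOCK in this article reads request.message.text, so the
// payload needs a `text` attribute.
const testMessage = { text: 'Hello from PubNub and Watson!' };

// The test panel takes JSON, so serialize the object before pasting it in.
const testPayload = JSON.stringify(testMessage);

console.log(testPayload);
```

If the BLOCK is running properly, the message that comes out the other side should carry an additional `speech` attribute holding the Text-to-Speech URL.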

Coding the BLOCK

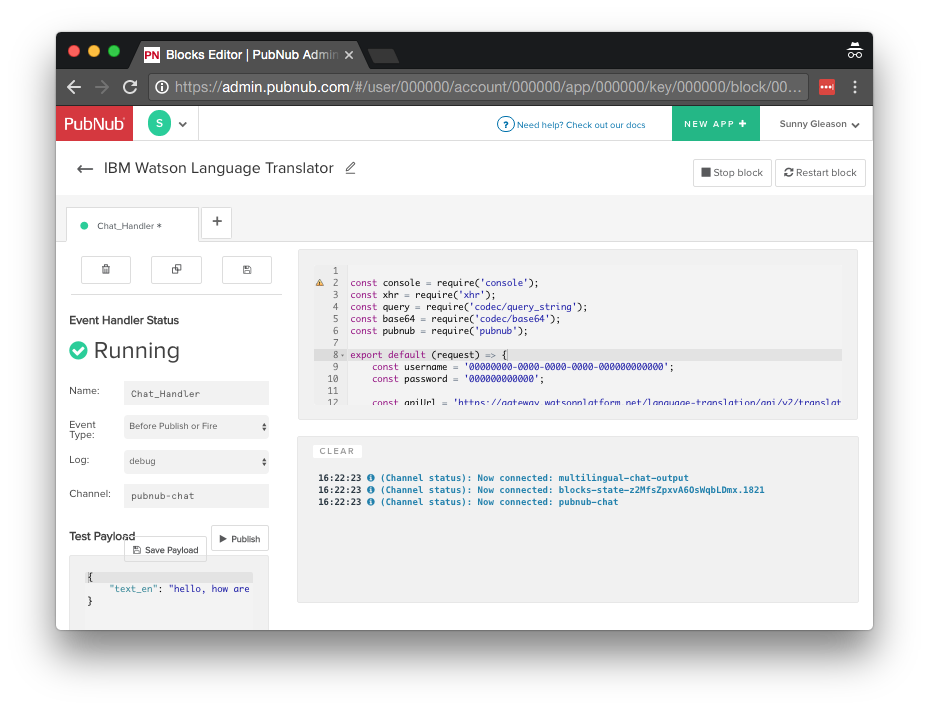

You’ll want to grab the 60 lines of BLOCK JavaScript and save them to a file, say, pubnub_watson_tts.js. It’s available as a Gist on GitHub for your convenience.

First up, we declare our dependencies: console (for debugging), xhr (for HTTP requests), store (for Key-Value storage), query (for query string encoding), and auth (for HTTP Basic auth support).

const console = require('console');

const xhr = require('xhr');

const store = require('kvstore');

const query = require('codec/query_string');

const auth = require('codec/auth');

Next, we create a method to handle incoming messages, declare the credentials for accessing the IBM Watson service, and set up the URLs for talking to the remote web service.

export default (request) => {

// Watson service credentials (username and password)

const username = '00000000-0000-0000-0000-000000000000';

const password = 'AAAAAAAAAAAA';

const apiUrl =

'https://stream.watsonplatform.net/text-to-speech/api/v1/synthesize';

// token url

const tokenUrl = 'https://stream.watsonplatform.net/authorization/api/v1/token?url=https://stream.watsonplatform.net/text-to-speech/api';

Creating a web service authorization token is a heavyweight operation, so we cache the token in the PubNub BLOCKS Key-Value store. If it’s there, we use it; if not, we populate it below.

return store.get('watson_token').then((watsonToken) => {

watsonToken = watsonToken || { token: null, timestamp: null };

We’ll be returning an ok() response since we’re just passing the decorated structured data message along (after modifications, if applicable).

let response = request.ok();

If the token is null or expired (older than 3,000,000 ms, or 50 minutes), we request a new one from the auth token API and cache it in the Key-Value store.

if (watsonToken.token === null ||

(Date.now() - watsonToken.timestamp) > 3000000) {

const httpOptions = {

as: 'json',

headers: {

Authorization: auth.basic(username, password)

}

};

response = xhr.fetch(tokenUrl, httpOptions).then(r => {

watsonToken.token = decodeURIComponent(r.body);

watsonToken.timestamp = Date.now();

store.set('watson_token', watsonToken);

Once we have the token, we create a valid Text-to-Speech service request URL and decorate the message “speech” attribute with the URL. In our UI, we’ll use this URL for WAV file playback.

if (watsonToken.token) {

const queryParams = {

accept: 'audio/wav',

voice: 'en-US_AllisonVoice',

text: request.message.text,

'watson-token': watsonToken.token

};

request.message.speech = apiUrl + '?' + query.stringify(queryParams);

}

return request.ok();

},

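// on failure, log the error and pass the message along undecorated

e => { console.error(e.body); return request.ok(); })

.catch((e) => { console.error(e); return request.ok(); });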

Likewise, if the token is already present, we use it and return the Text-to-Speech URL in the “speech” attribute right away without having to consult the auth token service.

} else {

const queryParams = {

accept: 'audio/wav',

voice: 'en-US_AllisonVoice',

text: request.message.text,

'watson-token': watsonToken.token

};

request.message.speech = apiUrl + '?' + query.stringify(queryParams);

}

return response;

});

};

There are a few attributes in there that you can change for your application if you like; we’ll cover those later.
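The token-caching decision in the BLOCK can also be pulled out into a small helper, which makes the expiry rule easier to read and test. This is a minimal sketch rather than part of the BLOCK itself: `needsNewToken` and `MAX_TOKEN_AGE_MS` are hypothetical names, and the record shape mirrors the `{ token, timestamp }` object stored under `watson_token` above.

```javascript
// Tokens older than this are treated as expired (50 minutes, as in the BLOCK).
const MAX_TOKEN_AGE_MS = 3000000;

// Returns true when the cached record is missing, empty, or too old.
// `cached` mirrors the { token, timestamp } shape kept in the KV store.
function needsNewToken(cached, now = Date.now()) {
  if (!cached || cached.token === null || cached.token === undefined) {
    return true;
  }
  return (now - cached.timestamp) > MAX_TOKEN_AGE_MS;
}

// No cache entry yet, so a token must be requested.
console.log(needsNewToken(null));

// A token cached just now is still usable.
console.log(needsNewToken({ token: 'abc', timestamp: Date.now() }));
```

Passing `now` as a parameter keeps the function free of hidden clock reads, which is handy if you want to check the boundary cases.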

OK, let’s move on to the UI!

The User Interface

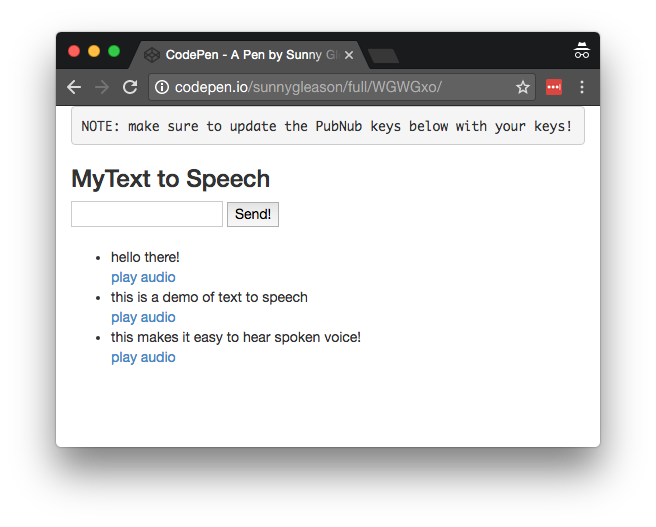

You’ll want to grab these 80 lines of HTML & JavaScript and save them to a file, say, pubnub_watson_tts.html.

The first thing you should do after saving the code is to replace two values in the JavaScript:

- YOUR_PUB_KEY: with the PubNub publish key mentioned above.

- YOUR_SUB_KEY: with the PubNub subscribe key mentioned above.

If you don’t, the UI will not be able to communicate with anything and will probably clutter your console log with entirely too many errors.
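A quick sanity check along these lines can catch the forgotten-placeholder case before the errors start piling up. It’s only a sketch: `checkPubNubKeys` is a hypothetical helper, not part of the PubNub SDK, and it only tests the “pub-c-” and “sub-c-” prefixes mentioned earlier.

```javascript
// Hypothetical helper: flags keys that are still placeholders or that lack
// the "pub-c-" / "sub-c-" prefixes described earlier in this article.
function checkPubNubKeys(publishKey, subscribeKey) {
  const problems = [];
  if (typeof publishKey !== 'string' || !publishKey.startsWith('pub-c-')) {
    problems.push('publish key should start with "pub-c-"');
  }
  if (typeof subscribeKey !== 'string' || !subscribeKey.startsWith('sub-c-')) {
    problems.push('subscribe key should start with "sub-c-"');
  }
  return problems;
}

// With the untouched placeholders, both checks report a problem.
console.log(checkPubNubKeys('YOUR_PUB_KEY', 'YOUR_SUB_KEY'));
```

An empty array means both keys at least look plausible; it says nothing about whether they are valid for your account.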

For your convenience, this code is also available as a Gist on GitHub, and a Codepen as well. Enjoy!

Dependencies

First up, we have the JavaScript code & CSS dependencies of our application.

<!doctype html>

<html>

<head>

<script src="https://code.jquery.com/jquery-1.10.1.min.js"></script>

<script src="https://cdn.pubnub.com/pubnub-3.15.1.min.js"></script>

<script src="https://ajax.googleapis.com/ajax/libs/angularjs/1.5.6/angular.min.js"></script>

<script src="https://cdn.pubnub.com/sdk/pubnub-angular/pubnub-angular-3.2.1.min.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/underscore.js/1.8.3/underscore-min.js"></script>

<link rel="stylesheet" href="//netdna.bootstrapcdn.com/bootstrap/3.0.2/css/bootstrap.min.css" />

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/font-awesome/4.6.3/css/font-awesome.min.css" />

</head>

<body>

For folks who have done front-end implementation with AngularJS before, these should be the usual suspects:

- jQuery: gives us the ability to use easy jQuery selectors.

- PubNub JavaScript client: to connect to our data stream integration channel.

- AngularJS: were you expecting a niftier front-end framework? Impossible!

- PubNub Angular JavaScript client: provides PubNub services in AngularJS quite nicely indeed.

- Underscore.js: we could avoid using Underscore.js, but then our code would be less awesome.

In addition, we bring in 2 CSS features:

- Bootstrap: in this app, we use it just for vanilla UI presentation.

- Font-Awesome: we love Font Awesome because it lets us use truetype font characters instead of image-based icons. Pretty sweet!

Overall, we were pretty pleased that we could build a nifty UI with so few dependencies. And with that… on to the UI!

The User Interface

Here’s what we intend the UI to look like:

The UI is pretty straightforward – everything is inside a div tag that is managed by a single controller that we’ll set up in the AngularJS code. That h3 heading should be pretty self-explanatory.

<div class="container" ng-app="PubNubAngularApp" ng-controller="MySpeechCtrl">

<pre> NOTE: make sure to update the PubNub keys below with your keys, and ensure that the text-to-speech BLOCK is configured properly! </pre>

<h3>MyText to Speech</h3>

We put in an HTML5 audio tag that we can use to play the Text-to-Speech audio as it arrives.

<audio id="theAudio"></audio>

We provide a simple text input for a message to send to the PubNub channel as well as a button to perform the publish() action.

<input ng-model="toSend" /> <input type="button" ng-click="publish()" value="Send!" />

Our UI consists of a simple list of messages. We iterate over the messages in the controller scope using a trusty ng-repeat. Each message includes a link to allow the user to speak the text again.

<ul>

<li ng-repeat="message in messages track by $index">

{{message.text}}

<br />

<a ng-click="sayIt(message.speech)">play audio</a>

</li>

</ul>

</div>

And that’s it – a functioning realtime UI in just a handful of code (thanks, AngularJS)!

The AngularJS Code

Right on! Now we’re ready to dive into the AngularJS code. It’s not a ton of JavaScript, so this should hopefully be pretty straightforward.

The first lines we encounter set up our application (with a necessary dependency on the PubNub AngularJS service) and a single controller (which we dub MySpeechCtrl). Both of these values correspond to the ng-app and ng-controller attributes from the preceding UI code.

<script>

angular.module('PubNubAngularApp', ["pubnub.angular.service"])

.controller('MySpeechCtrl', function($rootScope, $scope, Pubnub) {

Next up, we initialize a bunch of values. First is an array of message objects which starts out empty. After that, we set up the msgChannel as the channel name where we will send and receive realtime structured data messages. NOTE: make sure this matches the channel specified by your BLOCK configuration!

$scope.messages = [];

$scope.msgChannel = 'text-to-speech-chat';

We initialize the Pubnub object with our PubNub publish and subscribe keys mentioned above, and set a flag on $rootScope to make sure the initialization only occurs once. NOTE: this uses the v4 API syntax.

if (!$rootScope.initialized) {

Pubnub.init({

publishKey: 'YOUR_PUB_KEY',

subscribeKey: 'YOUR_SUB_KEY',

ssl:true

});

$rootScope.initialized = true;

}

The next thing we’ll need is a realtime message callback called msgCallback; it takes care of all the realtime messages we need to handle from PubNub. In our case, we have only one scenario – an incoming message containing text to speak, and the authorized text-to-speech URL. We push the message object onto the scope array and pass the URL to the sayIt() function for text-to-speech playback (we’ll cover that later). The push() operation should be in a $scope.$apply() call so that AngularJS gets the idea that a change came in asynchronously.

var msgCallback = function(payload) {

// in the v4 API, the published object arrives in payload.message

var message = payload.message;

$scope.$apply(function() {

$scope.messages.push(message);

});

$scope.sayIt(message.speech);

};
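Depending on the SDK version, the callback argument may be the message itself or an envelope that carries it under a `message` attribute. If you’re jumping between versions, a defensive helper like this one can smooth over the difference. It’s a sketch under that assumption: `unwrapMessage` is a hypothetical name, and the `text` and `speech` attributes are the ones our BLOCK reads and writes.

```javascript
// Hypothetical helper: pulls the decorated message out of whatever the
// callback receives. Accepts a bare message ({ text, speech }) or an
// envelope that wraps it ({ message: { text, speech } }).
function unwrapMessage(payload) {
  if (!payload || typeof payload !== 'object') {
    return null;
  }
  const candidate = (payload.message && typeof payload.message === 'object')
    ? payload.message
    : payload;
  // Only messages that carry text are useful to the list in the UI.
  return typeof candidate.text === 'string' ? candidate : null;
}

// Both shapes yield the same inner message.
const url = 'https://example.com/hello.wav';
console.log(unwrapMessage({ message: { text: 'hi', speech: url } }));
console.log(unwrapMessage({ text: 'hi', speech: url }));
```

Returning null for anything unexpected lets the caller skip both the push() and the playback in one check.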

The publish() function takes the contents of the text input, publishes it as a structured data object to the PubNub channel, and resets the text box to empty.

$scope.publish = function() {

Pubnub.publish({

channel: $scope.msgChannel,

message: { text: $scope.toSend }

});

$scope.toSend = "";

};
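Since the BLOCK reads the `text` attribute of each message, the published object needs to carry one. A small helper can build that object and skip empty input at the same time. This is a minimal sketch: `buildOutgoingMessage` is a hypothetical name, and the trimming rule is our own assumption about what counts as “nothing to say”.

```javascript
// Hypothetical helper: turns the raw input value into the message object
// to publish, or null when there is nothing worth sending.
function buildOutgoingMessage(input) {
  const text = (input || '').trim();
  return text.length > 0 ? { text: text } : null;
}

console.log(buildOutgoingMessage('  Hello there  '));
console.log(buildOutgoingMessage(''));
```

In the controller, you could call it with `$scope.toSend` and only publish when the result is not null.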

In the main body of the controller, we subscribe() to the message channel (using the JavaScript v4 API syntax) and bind the events to the callback function we just created.

Pubnub.subscribe({ channels: [$scope.msgChannel] });

Pubnub.addListener({ message: msgCallback });

Lastly, we define the sayIt() function, which takes the audio URL that our BLOCK built for the IBM Watson Text-to-Speech service and plays it using the HTML5 audio object. So easy!

$scope.sayIt = function (url) {

var audioElement = $("#theAudio")[0];

audioElement.src = url;

audioElement.play();

};
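If you’d like playback to be a bit more forgiving, here’s a sketch of a guarded variant. It assumes two things that the code above doesn’t handle: a message may arrive without a `speech` URL (say, if the BLOCK isn’t running), and modern browsers return a Promise from play() that rejects when playback is blocked. The name `playSpeech` and the stand-in audio object are hypothetical.

```javascript
// Hypothetical variant of sayIt() that takes the audio element as an
// argument so it can be exercised without a browser.
function playSpeech(audioElement, url) {
  if (!url) {
    return false; // the message was not decorated with a speech URL
  }
  audioElement.src = url;
  const result = audioElement.play();
  // Modern browsers return a Promise from play(); it rejects when
  // playback is blocked (for example, by autoplay policies).
  if (result && typeof result.catch === 'function') {
    result.catch(function (err) {
      console.warn('Audio playback failed:', err);
    });
  }
  return true;
}

// Minimal stand-in for an <audio> element, for illustration only.
const fakeAudio = { src: '', played: 0, play() { this.played += 1; } };
console.log(playSpeech(fakeAudio, undefined));
console.log(playSpeech(fakeAudio, 'https://example.com/hello.wav'));
```

In the real page, you would pass `$("#theAudio")[0]` as the first argument.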

We mustn’t forget to close out the HTML tags accordingly.

}); </script> </body> </html>

Not too shabby for about eighty lines of HTML & JavaScript!

Additional Features

There are a couple of other features worth mentioning in the IBM Watson Text-to-Speech API. They’re controlled by the query parameters our BLOCK sets:

const queryParams = {

accept: 'audio/wav',

voice: 'en-US_AllisonVoice',

text: request.message.text,

'watson-token': watsonToken.token

};

request.message.speech = apiUrl + '?' + query.stringify(queryParams);

You can find detailed API documentation here.

- accept is the MIME type of the audio stream you’d like to use, including WAV, OGG, FLAC, and more.

- voice is the voice you’d like to use: depending on the supported language, there may be one or more voices (male/female variants) available.
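To make those two attributes easy to swap, the URL-building step could be wrapped in a helper with overridable defaults. This is a sketch, not the BLOCK’s actual code: `buildSpeechUrl` and `SYNTHESIZE_URL` are hypothetical names, and the standard `URLSearchParams` class stands in for the BLOCK’s `codec/query_string` module so the example can run outside of PubNub.

```javascript
const SYNTHESIZE_URL =
  'https://stream.watsonplatform.net/text-to-speech/api/v1/synthesize';

// Hypothetical helper: builds the playback URL with an overridable voice
// and audio format. Defaults match the values used in the BLOCK.
function buildSpeechUrl(text, token, options = {}) {
  const params = new URLSearchParams({
    accept: options.accept || 'audio/wav',
    voice: options.voice || 'en-US_AllisonVoice',
    text: text,
    'watson-token': token
  });
  return SYNTHESIZE_URL + '?' + params.toString();
}

// Default WAV output, then the same text requested as OGG.
console.log(buildSpeechUrl('Hello world', 'TOKEN'));
console.log(buildSpeechUrl('Hello world', 'TOKEN', { accept: 'audio/ogg' }));
```

Check the API documentation for the voices and formats available to your service instance before changing the defaults.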

All in all, we found it pretty easy to get started with Text-to-Speech using the API!

Conclusion

Thank you so much for joining us in the Text-To-Speech article of our IBM Watson cognitive services series! Hopefully it’s been a useful experience learning about language-enabled technologies. In future articles, we’ll dive deeper into the IBM Watson APIs and use cases for language translation and sentiment analysis in realtime web applications.

Stay tuned, and please reach out anytime if you feel especially inspired or need any help!